Imagine a startup where a 20-person team can generate $100 million in annual recurring revenue in less than two years. This isn’t a futuristic fantasy — it’s the unfolding reality of AI-powered growth. Companies like Cursor, Bolt, Lovable, and Mercor are shattering traditional scaling rules, proving that lean teams armed with intelligent agents can disrupt entire industries.

What this reveals is a shift in startup dynamics. Founders are no longer constrained by headcount or conventional development cycles. As AI agents move from early successes to becoming a foundational part of modern software, they are also changing what that software demands of its infrastructure.

This shift follows a familiar pattern: As new applications emerge, they push the limits of existing infrastructure, creating demand for new capabilities. In turn, advances in infrastructure unlock even more powerful applications, fueling the next wave of innovation. AI agents are no different — their rapid adoption is reshaping both how software is built and the infrastructure that supports it. For founders, this presents a massive opportunity: Building the AI-native infrastructure — spanning tools, data, and orchestration — that will power this next wave of intelligent applications.

In this new era, every founder faces a pivotal choice: Adapt to the transformative power of AI or risk falling behind. The surge in agent adoption isn’t merely a trend — it’s a fundamental reimagining of how software is built, deployed, and scaled.

From Efficiency to Infrastructure: A Deeper Signal

It isn’t just about efficient growth — this transformation signals something deeper. As clear patterns for successful agent applications come into view, founders are rushing to embrace and extend what works. This surge in agent adoption is putting new demands on the infrastructure layer that supports these applications.

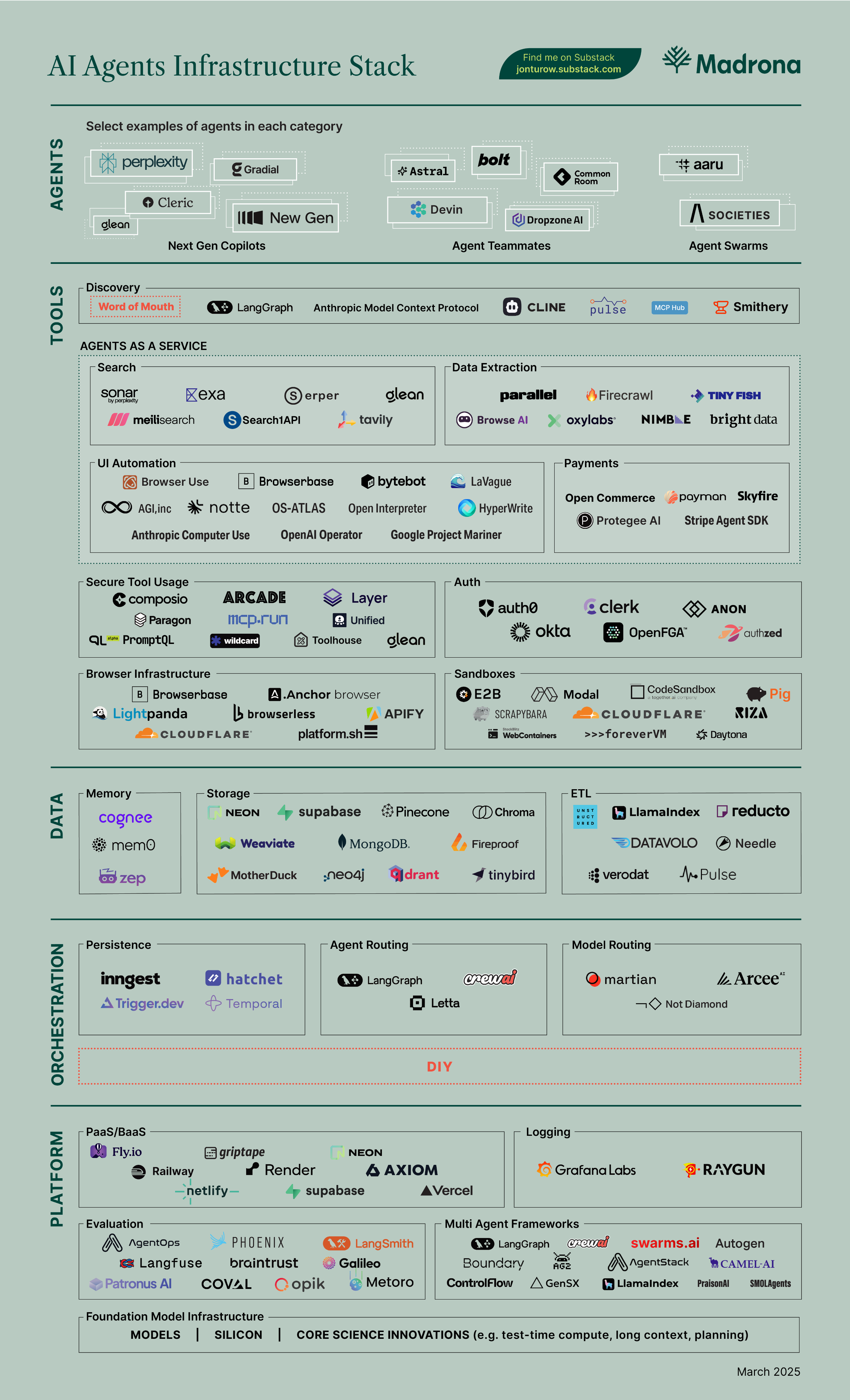

We’ve been tracking this evolution since “way back” in June 2024, when we wrote about “The Rise of AI Agent Infrastructure.” Back then, the landscape was sparse, dominated by DIY solutions as developers prioritized flexibility to experiment with different approaches. There was a broad group of new companies building infrastructure for the future state of agents, but such companies faced a moving target. Today, that picture has changed dramatically.

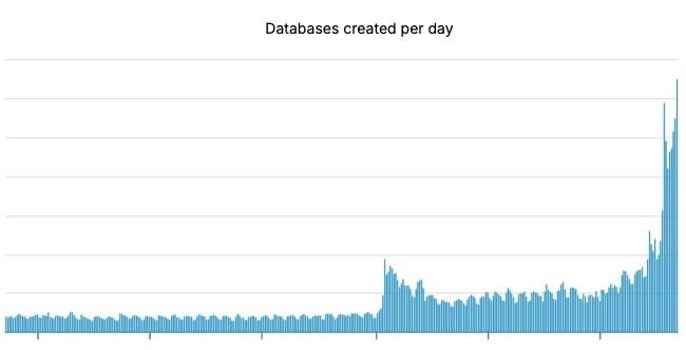

The evidence is everywhere: The serverless Postgres provider Neon reports that AI agents are now creating databases on its platform at more than 4x the rate of human developers, which is accelerating Neon’s overall metrics:

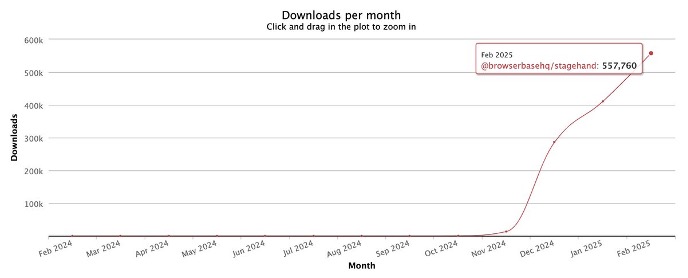

Specialized AI agent infrastructure components like Browserbase are seeing similar acceleration. For example, Browserbase’s Stagehand UI automation library recently crossed 500K monthly npm installs.

These aren’t just growth metrics — they’re signals of a fundamental shift in how software is built and deployed.

This transformation presents opportunities both for agent applications and the infrastructure that supports them. And contrary to the old adage that “by the time there’s a market map, it’s too late,” we’re seeing companies founded in 2025 already gaining significant traction in this space. The underlying capabilities and patterns are evolving so rapidly that new entrants can quickly identify and address emerging needs.

From Experimentation to Clear Patterns

A year ago, agent development was characterized by extensive experimentation. Developers built largely from scratch, prioritizing flexibility to pivot as they searched for winning approaches. Today, the picture has changed dramatically. Multiple repeatable patterns have emerged, and smart founders are rushing to embrace and extend what works.

These patterns build on what we identified in our January analysis of the agent landscape, which showed a set of common agent patterns coming into view. Next-gen copilots are context-aware assistants that proactively aid with complex tasks, exemplified by Bolt.new, AirOps, and Colimit. Teammate agents, such as Ravenna, Sailplane, and Basepilot, take this a step further by autonomously driving multi-step workflows forward. Agent organizations introduce collaborative systems where multiple specialized agents work together, as seen in Aaru’s probabilistic simulations. A fourth category, agents as a service, provides specialized agent capabilities as developer-facing services rather than user-facing products.

All of this has implications for the infrastructure stack pictured above.


The Infrastructure Stack Emerges: Three Defining Layers

As agent patterns solidify, three layers of the AI agent infrastructure stack have emerged as particularly defining: Tools, Data, and Orchestration. Each layer is seeing intense competition as providers race to solve agent-specific challenges that differ fundamentally from traditional SaaS infrastructure.

The Tools Layer: Making Agents Capable

The tools layer has seen the most dramatic expansion, reflecting the growing sophistication of agent interactions. Key battlegrounds include:

Browser Infrastructure & UI Automation

Agents increasingly need to interact with the visual web, not just APIs. Companies like Browserbase, Lightpanda, and Browserless are building the infrastructure to make this possible, while libraries like Browserbase’s Stagehand provide higher-level abstractions for common patterns.

Authentication & Security

When an agent acts on a user’s behalf, authentication and security take on new dimensions. Companies like Clerk, Anon, and Statics.ai are pioneering “auth for agents” – managing permissions, credentials, and security in agent-native ways.

Tool Discovery & Integration

As Akash Bajwa recently commented, Anthropic’s Model Context Protocol (MCP) is emerging as a potential “TCP/IP for AI agents,” offering standardized ways for agents to discover and interact with tools while maintaining context. Major players like Stripe, Neo4j, and Cloudflare already offer MCP servers, suggesting this could become a key standard for tool integration.

Companies like Composio and Arcade.dev are building layers of abstraction on top of these protocols. Composio provides MCP-compatible access to popular applications like Gmail and Linear, offering developers standardized interfaces through TypeScript and Python SDKs. Meanwhile, Arcade.dev simplifies authentication and tool management through a unified API layer compatible with OpenAI’s specifications.

This multi-layered approach is crucial because it addresses different developer needs. Infrastructure teams can build directly against protocols like MCP, while application developers can leverage managed solutions such as Composio or Arcade.dev. These approaches are not mutually exclusive; rather, they can coexist and complement each other, ultimately accelerating the adoption of agent-powered applications.
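To make the protocol level concrete, here is a minimal sketch of the two requests at the heart of MCP tool use: `tools/list` for discovery and `tools/call` for invocation, both carried as JSON-RPC 2.0 messages. This is a toy in-process handler, not the official MCP SDK; the `add` tool, the `TOOLS` registry, and the `handle` function are hypothetical names chosen for illustration, and transport, initialization, and capability negotiation are left out.

```python
# Hypothetical in-memory registry. The tool name, schema, and handler are
# illustrative assumptions, not part of any real MCP server.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tool discovery or tool invocation."""
    req_id = request.get("id")
    method = request.get("method")

    if method == "tools/list":
        # Discovery: describe every tool, including a JSON Schema for its input.
        tools = [
            {
                "name": name,
                "description": tool["description"],
                "inputSchema": tool["inputSchema"],
            }
            for name, tool in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}

    if method == "tools/call":
        # Invocation: look up the tool by name and run it with the arguments.
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {
                "jsonrpc": "2.0",
                "id": req_id,
                "error": {"code": -32602, "message": "Unknown tool"},
            }
        value = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
        return {"jsonrpc": "2.0", "id": req_id, "result": result}

    return {
        "jsonrpc": "2.0",
        "id": req_id,
        "error": {"code": -32601, "message": "Method not found"},
    }
```

An agent would first send `{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}` to learn what is available, then send a `tools/call` request naming a tool and its arguments. Managed layers such as the ones described above sit on top of this kind of exchange so that application developers do not have to write it themselves.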

Today, discovering agent-ready tools still often relies on word of mouth, feeling much like the early days of discovering Discord servers. But the combination of standardized protocols and managed integration layers suggests we’re moving toward more structured discovery mechanisms.

The Data Layer: Memory at Scale

The data layer illustrates how traditional infrastructure must evolve for agent workloads. Neon’s experience is instructive. As mentioned above, AI agents are now creating Neon databases at more than 4x the rate of human developers, driving new requirements for instant provisioning, automatic scaling, and isolated environments. When Create.xyz launched its developer agent on top of Neon, the agent created 20,000 new databases in just 36 hours. End users simply described what they wanted to build – “Build a job board that ranks applications using AI” or “Create a content management system that auto-generates SEO metadata” – and the agent handled all database operations automatically.

The data layer has specialized into distinct components, each serving a unique function. Memory systems, such as Mem0 and Zep, provide agent-specific context, ensuring that agents can retain and recall relevant information. Storage solutions, including both traditional databases like Neon and vector databases like Pinecone, are evolving to accommodate the demands of agent workloads. Meanwhile, ETL services are emerging to handle the processing of unstructured data, enabling more efficient data transformation and integration within agent-powered systems.
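As a rough illustration of what "retain and recall" means at the interface level, the sketch below stores text snippets and returns the ones that share the most words with a query. It is a deliberately naive stand-in and does not reflect how Mem0, Zep, or any vector database works internally; the `MemoryStore` class and its `remember` and `recall` methods are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Toy agent memory: keeps text snippets and recalls them by word overlap."""

    items: list = field(default_factory=list)

    def remember(self, text: str) -> None:
        # Retain a snippet for later recall.
        self.items.append(text)

    def recall(self, query: str, k: int = 2) -> list:
        # Score each snippet by how many lowercase words it shares with the query.
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(item.lower().split())), item)
            for item in self.items
        ]
        # Keep only snippets with some overlap, highest score first.
        relevant = [pair for pair in scored if pair[0] > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in relevant[:k]]
```

Before each model call, an agent could run `recall` on the user's latest message and prepend the results to its prompt. Swapping the word-overlap score for a more capable retrieval method would change the ranking without changing that calling pattern.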

Orchestration: Managing Agent Complexity

As applications incorporate multiple agents working together, orchestration has become crucial. Managed orchestration solutions like LangGraph, CrewAI, and Letta enable developers to compose and coordinate those agents, streamlining complex workflows. Complementing these orchestration tools, persistence engines such as Inngest, Hatchet, and Temporal tackle the challenge of maintaining state across long-running agent processes, ensuring continuity and reliability.
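The general idea behind maintaining state across long-running processes can be sketched as checkpointing: record each completed step's result so that a restarted run skips work it has already done. The example below is a simplified illustration under that assumption, not the implementation or API of Inngest, Hatchet, or Temporal; the `Checkpoint` class and its `step` method are hypothetical, and step results must be JSON-serializable.

```python
import json
from pathlib import Path


class Checkpoint:
    """Persists completed step results so a restarted run can skip them."""

    def __init__(self, path: Path):
        self.path = path
        # Load results from a previous run if the checkpoint file exists.
        self.done = json.loads(path.read_text()) if path.exists() else {}

    def step(self, name: str, fn):
        # A step that already finished returns its saved result without rerunning.
        if name in self.done:
            return self.done[name]
        result = fn()
        # Save the result to disk before moving on to the next step.
        self.done[name] = result
        self.path.write_text(json.dumps(self.done))
        return result
```

If an agent workflow calls `step("fetch", ...)`, then `step("summarize", ...)`, and the process crashes during the second step, a new `Checkpoint` created on the same file returns the saved `fetch` result immediately and resumes at `summarize`.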

Looking Ahead: The Bridge Takes Shape

A year ago, we described developers as “speeding across a half-finished bridge,” building agent applications while the infrastructure to support them was still under construction. Today, that bridge is more complete — but traffic is increasing exponentially, and we’re still adding new lanes.

The rapid evolution of AI agent infrastructure reflects a fundamental shift in how software is built. We’re seeing more than just eye-popping growth metrics. We’re watching the emergence of a new software development paradigm.

The infrastructure stack is maturing to meet that moment. From standardized protocols like MCP to specialized services for auth, memory, and orchestration, we’re seeing the emergence of patterns that will define the next generation of applications. Yet the pace of innovation means we’re still in the early chapters of this transformation.

Let’s Build Together

We are pleased to share this view of the AI agent infrastructure stack as it looks in February 2025. Our industry continues to learn together — so please let us know what we’re missing and how we can more accurately reflect developer options.

Madrona is actively investing in AI agents, the infrastructure that supports them, and the apps that rely upon them. We have backed multiple companies in this area and will continue to do so. You can reach out directly at: [email protected]