(For a more recent AI Infrastructure market map, see Jon Turow’s story from March 2025.)

An explosion of GenAI apps is plain to see, with applications for productivity, development, cloud infrastructure management, media consumption, and even healthcare revenue cycle management. That explosion is made possible by rapidly improving models and the underlying platform infrastructure our industry has built over the past 24 months, which simplified hosting, fine-tuning, data loading, and memory — and made it easier to build apps.

As a result, the eyes of many founders and investors have turned north to the top of the stack, where we can finally start to put our most advanced technologies to work for end-users. But the blistering pace of GenAI development means few assumptions hold true for long. Apps are now being built in a new way that will impose new requirements on the underlying infrastructure. The developers building them are speeding across a half-finished bridge. Their apps won’t achieve their full potential if our industry fails to support them lower in the stack with a new set of AI agent infrastructure components.

The Rise of Agents

One key change is the rise of AI agents: autonomous actors that can plan and execute multi-step tasks. Today, AI agents, rather than direct prompts to the underlying model, are becoming a common interface that end-users encounter and a core abstraction that developers build upon. This further accelerates how fast new apps can be built and creates a new set of opportunities at the platform layer.

Starting with projects like MRKL in 2022 and ReAct, BabyAGI, and AutoGPT in 2023, developers found that chains of prompts and responses could decompose large tasks into smaller ones (planning) and execute them autonomously. Frameworks like LangChain, LlamaIndex, Semantic Kernel, Griptape, and more showed that agents could interact with APIs via code, and research papers like Toolformer and Gorilla showed that the underlying models could learn to use APIs effectively. Research from Microsoft, Stanford, and Tencent has shown that AI agents work even better together than they do by themselves.

Today, the word agent means different things to different people. If you speak to enough practitioners, a spectrum emerges with multiple concepts that could all be called agents. BabyAGI creator Yohei Nakajima has one great way to look at this:

- Hand-Crafted Agents: Chains of prompts and API calls that are autonomous but operate within narrow constraints.

- Specialized Agents: Dynamically decide what to do within a subset of task types and tools. Constrained, but less so than hand-crafted agents are.

- General Agents: The AGI of Agents – still at the horizon versus practical reality today.

Reasoning limitations of our most advanced frontier models (GPT-4o, Gemini 1.5 Pro, Claude 3 Opus, etc.) are the key constraint that holds back our ability to build, deploy, and rely upon more advanced agents (specialized and general). Agents use frontier models to plan, prioritize, and self-validate – that is, to decompose large tasks into smaller ones and ensure the output is correct. So modest levels of reasoning mean the agents are constrained as well. Over time, new frontier models with more advanced reasoning capabilities (GPT-5, Gemini 2, etc.) will make for more advanced agents.
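The plan, execute, and self-validate loop described above can be sketched in a few lines. This is only an illustrative sketch, not any particular framework's implementation: `run_agent`, `toy_model`, and the `PLAN:` / `EXECUTE:` / `VALIDATE:` prompt prefixes are all hypothetical names invented here, and the "model" is a deterministic stub so the example runs without any API.

```python
from typing import Callable, List

# Hypothetical stand-in for a frontier-model call: takes a prompt, returns text.
Model = Callable[[str], str]


def run_agent(task: str, model: Model, max_retries: int = 2) -> List[str]:
    """Plan a task into steps, execute each step, and self-validate the output."""
    # Planning: ask the model to decompose the task, one step per line.
    plan = model(f"PLAN: {task}")
    steps = [line.strip() for line in plan.splitlines() if line.strip()]

    results = []
    for step in steps:
        output = ""
        for _ in range(max_retries + 1):
            output = model(f"EXECUTE: {step}")
            # Self-validation: ask the model whether the output is acceptable.
            verdict = model(f"VALIDATE: {step} -> {output}")
            if verdict.strip().upper().startswith("OK"):
                break
        # If every attempt fails validation, the last output is kept anyway.
        results.append(output)
    return results


def toy_model(prompt: str) -> str:
    """Deterministic fake model so the sketch runs without any API."""
    if prompt.startswith("PLAN:"):
        return "find data\nsummarize data"
    if prompt.startswith("EXECUTE:"):
        return "done: " + prompt[len("EXECUTE: "):]
    return "OK"


print(run_agent("research a market", toy_model))
```

Every stage here depends on the same model call, which is why weak reasoning in the model constrains the whole agent: a poor plan or an unreliable validation verdict propagates to every step.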

Applying Agents

Today, developers say that the best-performing agents are all extremely hand-crafted. Developers are being scrappy about how to apply these technologies in their current state by figuring out which use cases work today under the right constraints. Agents are proliferating despite their limitations. End-users are sometimes aware of them, as with a coding agent that responds on Slack. Increasingly, agents also get buried under other UX abstractions such as a search box, spreadsheet, or canvas.

Consider Matrices, a spreadsheet application company formed in 2024. Matrices builds spreadsheets that complete themselves on users’ behalf, for example, by inferring what information a user wants in (say) cells A1:J100 based on the row and column titles, then searching the web and parsing web pages to find each piece of data. Matrices’ core spreadsheet UX is not so different from Excel (launched 1985) or even VisiCalc (launched 1979). But the developers of Matrices can use 1,000+ agents for independent multi-step reasoning about each row, column, or even every cell.

Or consider Gradial, a marketing automation company formed in 2023. Gradial lets digital marketing teams automate their content supply chain by helping create asset variants, execute content updates, and create or migrate pages across channels. Gradial offers a chat interface, but can also meet marketers in their existing workflows by responding to tickets in tracking systems like Jira or Workfront. The marketer does not need to break down high-level tasks into individual actions. Instead, the Gradial agent does that and finishes the tasks behind the scenes on the marketer’s behalf.

To be sure, agents today have lots of limitations. They are often wrong. They need to be managed. Running too many of them has implications for bandwidth, cost, latency, and user experience. And developers are still learning how to use them effectively. But readers would be right to notice that those limitations echo complaints about foundation models themselves. Techniques like validation, voting, and model ensembles reinforce for AI agents what recent history has shown for GenAI overall: developers are counting on rapid science and engineering improvements and building with a future state in mind. They are speeding across the half-finished bridge I mentioned above, under the assumption it will be finished rapidly.
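Voting is the simplest of the techniques mentioned above to illustrate. The sketch below is a minimal example under stated assumptions: several agents (or several runs of one agent) answer the same question, and an answer is accepted only if a strict majority agrees. The names `majority_vote` and `ensemble` and the `min_agreement` threshold are hypothetical, chosen here for illustration.

```python
from collections import Counter
from typing import Callable, List, Optional


def majority_vote(answers: List[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the most common answer if its share of votes exceeds min_agreement."""
    if not answers:
        return None
    # Normalize lightly so trivial differences in case or spacing do not split votes.
    normalized = [a.strip().lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    return winner if count / len(normalized) > min_agreement else None


def ensemble(question: str, agents: List[Callable[[str], str]]) -> Optional[str]:
    """Ask every agent the same question and vote on the result."""
    return majority_vote([agent(question) for agent in agents])


# Three stub agents standing in for independent model calls.
agents = [lambda q: "Paris", lambda q: "paris ", lambda q: "Lyon"]
print(ensemble("Capital of France?", agents))
```

Returning `None` when no answer clears the threshold gives the calling application a clear signal to retry, escalate to a human, or fall back to another strategy. It also makes the cost trade-off visible: each extra vote is another model call.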

Supporting Agents with Infrastructure

All of this means that our industry has work to do to build infrastructure that supports AI agents and the applications that rely upon them.

Today, many agents are almost entirely vertically integrated, without much managed infrastructure. That means: self-managed cloud hosts for the agents, databases for memory and state, connectors to ingest context from external sources, and something called Function Calling, Tool Use, or Tool Calling to use external APIs. Some developers stitch things together with software frameworks like LangChain (especially its evaluation product LangSmith). This stack works best today because developers are iterating quickly and feel they need to control their products end-to-end.
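To make the tool-calling idea concrete, here is a minimal sketch of the application side of it: the model emits a structured request naming a tool and its arguments, and the developer's code looks up and runs the matching function. The JSON shape (`name` and `arguments` keys), the `tool` decorator, and the `get_weather` stub are assumptions made for this example, not the format of any specific model provider.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Stub: a real tool would call an external API here.
    return f"Sunny in {city}"


def dispatch(model_output: str) -> Any:
    """Parse a model's tool request (assumed to be JSON) and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Pretend the model produced this string in response to a user question.
print(dispatch('{"name": "get_weather", "arguments": {"city": "Seattle"}}'))
```

Rejecting unknown tool names explicitly matters because the request comes from model output, which can be wrong; in a vertically integrated stack the developer owns this validation themselves.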

But the picture will change in the coming months as use cases solidify and design patterns improve. We are still solidly in the era of hand-crafted and specialized agents. So, the most useful infrastructure primitives in the near term are going to be the ones that meet developers where they are and let them build hand-crafted agent networks they control. That infrastructure can also be forward-looking. Over time, reasoning will gradually improve, frontier models will come to steer more of the workflows, and developers will want to focus on product and data — the things that differentiate them. They want the underlying platform to “just work” with scale, performance, and reliability.

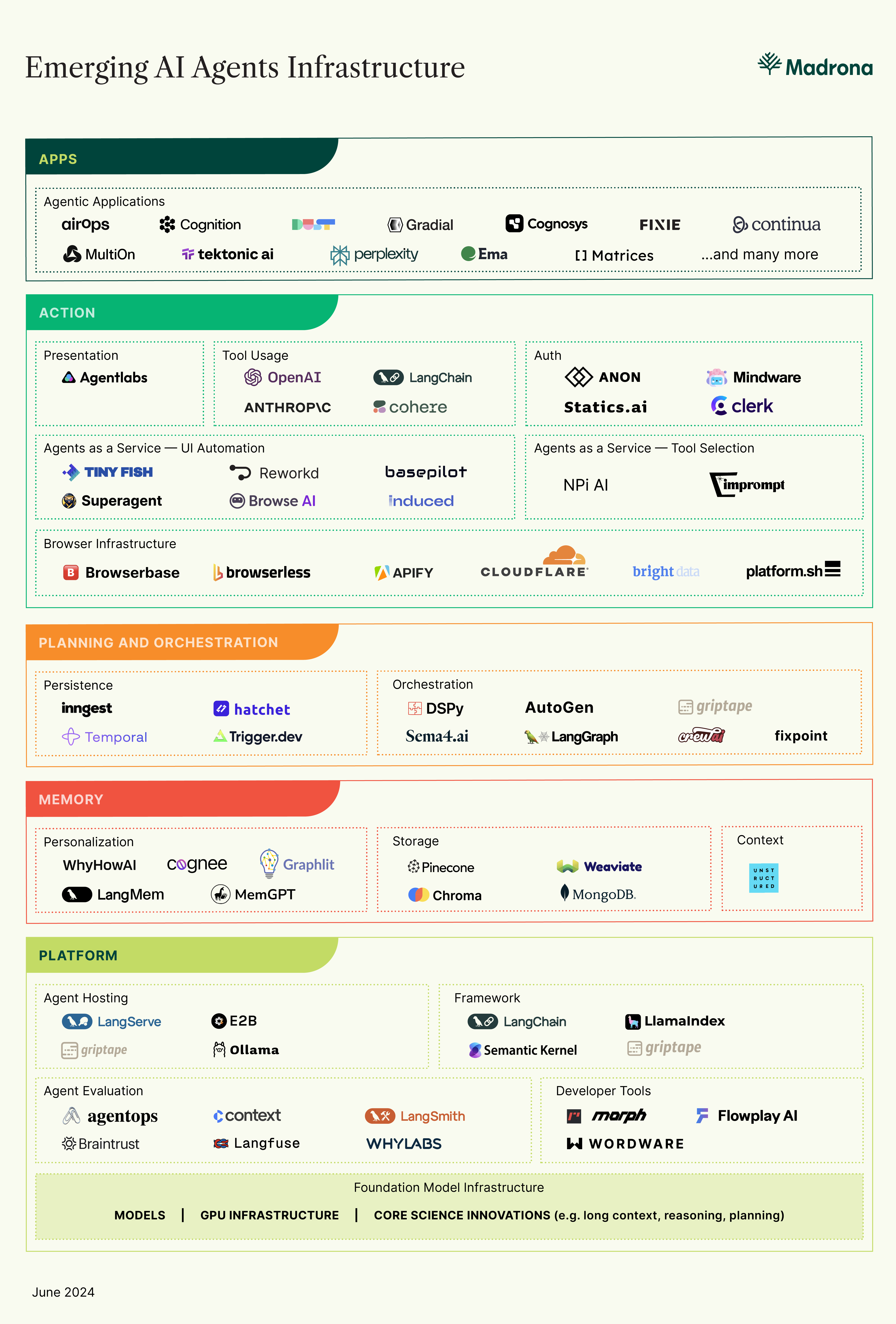

Sure enough, when you look at it this way, you can see that a rich ecosystem has already started to form that provides AI agent infrastructure. Here are some of the key themes:

Agent-specific developer tools

Tools like Flowplay, Wordware, and Rift natively support common design patterns (voting, ensembles, validation, “crews”), which will help more developers understand these patterns and put them to use to build agents. A useful and opinionated developer tool could be one of the most important pieces of infrastructure that unblocks the next wave of applications based on this powerful agent technology.

Agents as a service

Hand-crafted agents for specific tasks are starting to act as infrastructure that developers can choose to buy versus build. Such agents offer opinionated functionality like UI automation (Tinyfish, Reworkd, Firecrawl, Superagent, Induced, and Browse.ai), tool selection (NPI, Imprompt), and prompt creation and engineering. Some end-customers may apply those agents directly, but developers will also access those agents via API and assemble them into broader applications.

Browser Infrastructure

Reading the web and acting upon it is a key priority. Developers make their agents richer by letting them interact with APIs, SaaS applications, and the web. API interfaces are straightforward enough, but websites and SaaS applications are complex to access, navigate, parse, and scrape. Browser infrastructure lets agents use any web page or web app as they would use an API, accessing its information and capabilities in structured form. That requires managing connections, proxies, and captchas. Browserbase, Browserless, Apify, Bright Data, Platform.sh, and Cloudflare Browser Rendering are all examples of companies and products in this area.

Personalized Memory

When agents distribute tasks across multiple models, it becomes important to provide shared memory and ensure each model has access to relevant historical data and context. Vector stores like Pinecone, Weaviate, and Chroma are useful for this. But a new class of companies and projects with complementary, opinionated functionality exists, including WhyHow and Cognee, a feature of LangChain called LangMem, and a popular open-source project called MemGPT. These show how to personalize agent memory for an end-user and that user’s current context.
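As a rough illustration of shared, per-user memory, the sketch below keeps notes keyed by user and returns the ones most relevant to a query. It is a toy under stated assumptions: `SharedMemory`, `remember`, and `recall` are hypothetical names, and simple word overlap stands in for the embedding similarity a vector store would provide.

```python
from collections import defaultdict
from typing import Dict, List


class SharedMemory:
    """Toy memory that several agents can share, scoped per end-user."""

    def __init__(self) -> None:
        self._notes: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, note: str) -> None:
        self._notes[user_id].append(note)

    def recall(self, user_id: str, query: str, k: int = 2) -> List[str]:
        """Return up to k of this user's notes that share words with the query."""
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(note.lower().split())), note)
            for note in self._notes.get(user_id, [])
        ]
        # Keep only notes with some overlap, highest overlap first.
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
        return [note for _, note in ranked[:k]]


memory = SharedMemory()
memory.remember("u1", "prefers quarterly revenue reports")
memory.remember("u1", "based in Seattle")
memory.remember("u2", "prefers weekly reports")
print(memory.recall("u1", "revenue reports"))
```

Scoping every read and write by `user_id` is the part that makes the memory personalized: two agents working for the same user see the same context, while another user's notes never leak into the results.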

Auth for Agents

These tools manage authentication and authorization on behalf of agents as they interact with external systems on the end-user’s behalf. Today, developers are using OAuth tokens to let agents impersonate the end-users (delicate), and, in some cases, even asking users to provide API keys. But the UX and security implications are serious, and not all of the web supports OAuth (this is why Plaid exists in Financial Services). Anon.com, Mindware, and Statics.ai are three examples of what developers are going to want at scale: managed authentication and authorization for the agents themselves.

“Vercel for Agents”

This category is about seamlessly managing, orchestrating, and scaling the hosting of agents with a distributed system. Today there is a disparate set of primitives for agent hosting (E2b.dev, Ollama, Langserve), persistence (Inngest, Hatchet.run, Trigger.dev, Temporal.io), and orchestration (DSPy, AutoGen, CrewAI, Sema4.ai, LangGraph). Some platforms (LangChain and Griptape) offer managed services for different combinations of these things. A consolidated service that offers scalable, managed hosting with persistence and orchestration on an app developer’s behalf will mean developers no longer have to think at multiple levels of abstraction (app and agent) and can instead focus on the problem they wish to solve.
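To show why persistence matters for multi-step agents, here is a minimal checkpointing sketch. It does not represent how any of the products named above work; `run_with_checkpoints` is a hypothetical helper, and an in-memory dictionary stands in for the durable store a real service would use.

```python
from typing import Callable, Dict, List, Tuple

# A step is a name plus a zero-argument function that does the work.
Step = Tuple[str, Callable[[], str]]


def run_with_checkpoints(steps: List[Step], checkpoint: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, skipping any whose result is already checkpointed."""
    for name, fn in steps:
        if name in checkpoint:
            continue  # Completed in an earlier run; do not repeat the work.
        # The result is recorded only if fn() returns without raising.
        checkpoint[name] = fn()
    return checkpoint


attempts = {"parse": 0}


def fetch() -> str:
    return "page"


def parse() -> str:
    attempts["parse"] += 1
    if attempts["parse"] == 1:
        raise RuntimeError("simulated crash")
    return "parsed"


saved: Dict[str, str] = {}
workflow = [("fetch", fetch), ("parse", parse)]
try:
    run_with_checkpoints(workflow, saved)
except RuntimeError:
    pass  # First run dies midway; "fetch" is already saved.
print(run_with_checkpoints(workflow, saved))
```

When the workflow is re-run after the failure, the expensive `fetch` step is not repeated. For agents, where each step may be a slow or costly model call, resuming from the last completed step is much of what a managed persistence layer would provide.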

Building the Future of AI Agent Infrastructure

It is so early in the evolution of AI agent infrastructure that today, we see a mix of operational services and open-source tools that have yet to be commercialized or incorporated into broader services. And it’s far from clear who the winners will be — in this domain, the endgame winners may be young today or may not yet exist. So let’s get to work.

We are pleased to share a view of the emerging AI agent infrastructure stack as it looks in June 2024. Our industry is still learning together — so please let us know what we’re missing and how we can more accurately reflect developer options. Madrona is actively investing in AI agents, the infrastructure that supports them, and the apps that rely upon them. We have backed multiple companies in this area and will continue to do so. We would love to meet you if you’re building AI agent infrastructure, or applications that rely on it. You can reach out to us directly at: jonturow@madrona.com